前言

Apache Dubbo是一款高性能、轻量级的开源 Java 服务框架,提供了六大核心能力:面向接口代理的高性能RPC调用,智能容错和负载均衡,服务自动注册和发现,高度可扩展能力,运行期流量调度,可视化的服务治理与运维。

简单介绍后,接下来跟大家分享下实际项目使用中出现的Dubbo线程池关闭导致业务无法正常响应的Bug。

现象分析及解决方案

环境信息

Dubbo version: 2.7.12

Operating System version: centos

Java version: 8现象

Dubbo版本从2.7.7升级至2.7.12后,provider下线造成部分consumer线程池Terminated,继而请求无法正常响应,造成严重的产线事故。

分析

通过在开发环境模拟,发现在压测场景下(模拟产线不断处理业务)会出现该问题。出现的时候伴随日志信息如下:

An exception '{}' [enable DEBUG level for full stacktrace] was thrown by a user handler's exceptionCaught() method while handling the following exception:

org.apache.dubbo.remoting.ExecutionException: class org.apache.dubbo.remoting.transport.dispatcher.all.AllChannelHandler error when process received event .

at org.apache.dubbo.remoting.transport.dispatcher.all.AllChannelHandler.received(AllChannelHandler.java:68)

at org.apache.dubbo.remoting.exchange.support.header.HeartbeatHandler.received(HeartbeatHandler.java:90)

at org.apache.dubbo.remoting.transport.MultiMessageHandler.received(MultiMessageHandler.java:52)

at org.apache.dubbo.remoting.transport.AbstractPeer.received(AbstractPeer.java:147)

at org.apache.dubbo.remoting.transport.netty4.NettyClientHandler.channelRead(NettyClientHandler.java:83)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)

at io.netty.handler.timeout.IdleStateHandler.channelRead(IdleStateHandler.java:286)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)

at io.netty.handler.codec.ByteToMessageDecoder.fireChannelRead(ByteToMessageDecoder.java:323)

at io.netty.handler.codec.ByteToMessageDecoder.channelRead(ByteToMessageDecoder.java:297)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

at io.netty.channel.AbstractChannelHandlerContext.fireChannelRead(AbstractChannelHandlerContext.java:340)

at io.netty.channel.DefaultChannelPipeline$HeadContext.channelRead(DefaultChannelPipeline.java:1434)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:362)

at io.netty.channel.AbstractChannelHandlerContext.invokeChannelRead(AbstractChannelHandlerContext.java:348)

at io.netty.channel.DefaultChannelPipeline.fireChannelRead(DefaultChannelPipeline.java:965)

at io.netty.channel.nio.AbstractNioByteChannel$NioByteUnsafe.read(AbstractNioByteChannel.java:163)

at io.netty.channel.nio.NioEventLoop.processSelectedKey(NioEventLoop.java:648)

at io.netty.channel.nio.NioEventLoop.processSelectedKeysOptimized(NioEventLoop.java:583)

at io.netty.channel.nio.NioEventLoop.processSelectedKeys(NioEventLoop.java:500)

at io.netty.channel.nio.NioEventLoop.run(NioEventLoop.java:462)

at io.netty.util.concurrent.SingleThreadEventExecutor$5.run(SingleThreadEventExecutor.java:897)

at io.netty.util.concurrent.FastThreadLocalRunnable.run(FastThreadLocalRunnable.java:30)

at java.lang.Thread.run(Thread.java:745)

Caused by: java.util.concurrent.RejectedExecutionException: Task org.apache.dubbo.remoting.transport.dispatcher.ChannelEventRunnable@7d7d66e4 rejected from java.util.concurrent.ThreadPoolExecutor@15527cbc[Terminated, pool size = 0, active threads = 0, queued tasks = 0, completed tasks = 93]

at java.util.concurrent.ThreadPoolExecutor$AbortPolicy.rejectedExecution(ThreadPoolExecutor.java:2047)

at java.util.concurrent.ThreadPoolExecutor.reject(ThreadPoolExecutor.java:823)

at java.util.concurrent.ThreadPoolExecutor.execute(ThreadPoolExecutor.java:1369)

at org.apache.dubbo.remoting.transport.dispatcher.all.AllChannelHandler.received(AllChannelHandler.java:62)

... 28 common frames omitted从以上信息看发现线程池被关闭了。联想到此次升级的目的,是为了解决Dubbo每个端口创建200个线程导致创建线程过多的问题(对于我们的网关而言会达到几千个),大胆猜测是升级后Dubbo的Bug导致的。因此到GitHub提交了Issue。

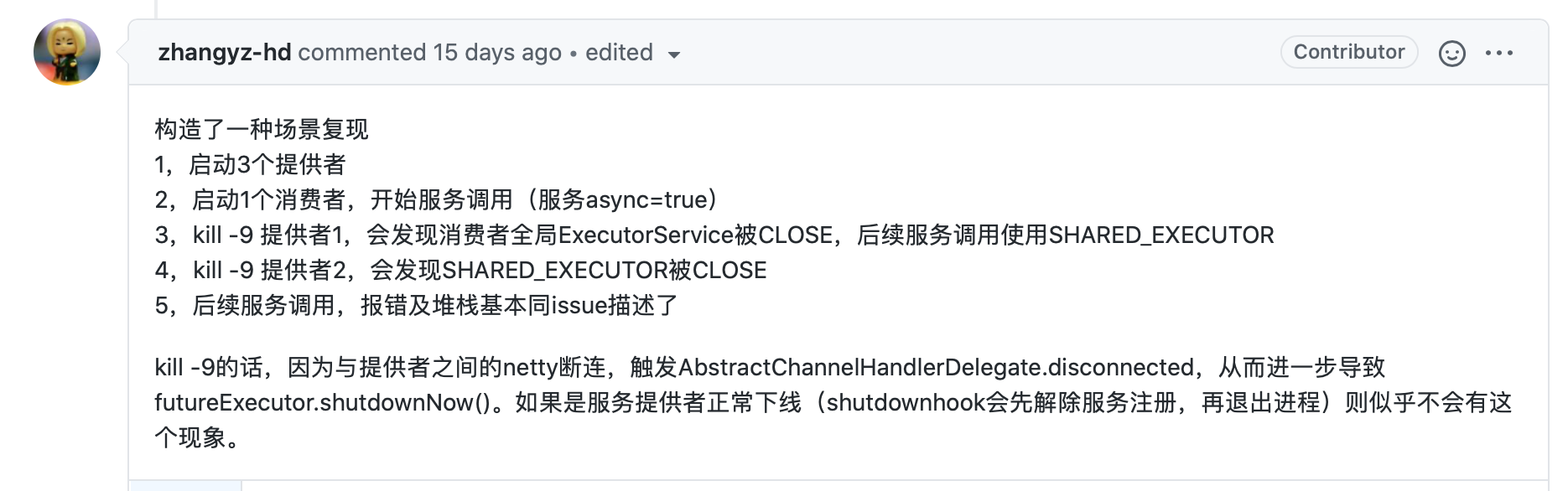

很快,有Contributor进行了回应,确认在以下场景可以复现:

解决方案

考虑到官方修复速度比较慢,我们基于Dubbo的SPI机制重新实现ExecutorRepository,在检测到线程池被关闭的情况下马上创建共享线程池,具体如下:

import lombok.extern.slf4j.Slf4j;

import org.apache.dubbo.common.URL;

import org.apache.dubbo.common.extension.ExtensionLoader;

import org.apache.dubbo.common.threadpool.ThreadPool;

import org.apache.dubbo.common.threadpool.manager.ExecutorRepository;

import org.apache.dubbo.common.threadpool.manager.Ring;

import org.apache.dubbo.common.utils.ExecutorUtil;

import org.apache.dubbo.common.utils.NamedThreadFactory;

import java.util.Map;

import java.util.concurrent.*;

import static org.apache.dubbo.common.constants.CommonConstants.*;

/**

* 升级dubbo版本到2.7.12 处理如下bug

* https://github.com/apache/dubbo/pull/7109

* https://github.com/apache/dubbo/pull/6959

* <p>

* 但是造成服务蓝绿发布下线服务时候,dubbo全局线程池 被关闭,导致节点请求失败 问题

* https://github.com/apache/dubbo/issues/8172

*

* 临时解决方案:

* 基于全局线程池;线程池关闭后重新创建线程池;

*

* <p>

* copy from org.apache.dubbo.common.threadpool.manager.DefaultExecutorRepository

* <p>

* Consider implementing {@code Licycle} to enable executors shutdown when the process stops.

*/

/**

* Consider implementing {@code Licycle} to enable executors shutdown when the process stops.

*/

@Slf4j

public class CustomExecutorRepository implements ExecutorRepository {

private int DEFAULT_SCHEDULER_SIZE = Runtime.getRuntime().availableProcessors();

private final ExecutorService SHARED_EXECUTOR = Executors.newCachedThreadPool(new NamedThreadFactory("DubboSharedHandler", true));

private Ring<ScheduledExecutorService> scheduledExecutors = new Ring<>();

private ScheduledExecutorService serviceExporterExecutor;

private ConcurrentMap<String, ConcurrentMap<String, ExecutorService>> data = new ConcurrentHashMap<>();

public SisyphusExecutorRepository() {

for (int i = 0; i < DEFAULT_SCHEDULER_SIZE; i++) {

ScheduledExecutorService scheduler = Executors.newSingleThreadScheduledExecutor(new NamedThreadFactory("Dubbo-framework-scheduler"));

scheduledExecutors.addItem(scheduler);

}

serviceExporterExecutor = Executors.newScheduledThreadPool(1, new NamedThreadFactory("Dubbo-exporter-scheduler"));

}

/**

* Get called when the server or client instance initiating.

*

* @param url

* @return

*/

@Override

public synchronized ExecutorService createExecutorIfAbsent(URL url) {

Map<String, ExecutorService> executors = data.computeIfAbsent(EXECUTOR_SERVICE_COMPONENT_KEY, k -> new ConcurrentHashMap<>());

//issue-7054:Consumer's executor is sharing globally, key=Integer.MAX_VALUE. Provider's executor is sharing by protocol.

String consumerKey = getConsumerExecutorKey(url);

String portKey = CONSUMER_SIDE.equalsIgnoreCase(url.getParameter(SIDE_KEY)) ? consumerKey : String.valueOf(url.getPort());

ExecutorService executor = executors.computeIfAbsent(portKey, k -> createExecutor(url));

// If executor has been shut down, create a new one

if (executor.isShutdown() || executor.isTerminated()) {

executors.remove(portKey);

executor = createExecutor(url);

executors.put(portKey, executor);

}

return executor;

}

@Override

public ExecutorService getExecutor(URL url) {

Map<String, ExecutorService> executors = data.get(EXECUTOR_SERVICE_COMPONENT_KEY);

/**

* It's guaranteed that this method is called after {@link #createExecutorIfAbsent(URL)}, so data should already

* have Executor instances generated and stored.

*/

if (executors == null) {

log.warn("No available executors, this is not expected, framework should call createExecutorIfAbsent first " +

"before coming to here.");

return null;

}

String consumerKey = getConsumerExecutorKey(url);

String portKey = CONSUMER_SIDE.equalsIgnoreCase(url.getParameter(SIDE_KEY)) ? consumerKey : String.valueOf(url.getPort());

ExecutorService executor = executors.get(portKey);

// 线程池关闭重新创建

if (executor == null || executor.isShutdown() || executor.isTerminated()) {

long createStart = System.currentTimeMillis();

executor = createExecutorIfAbsent(url);

long createEnd = System.currentTimeMillis();

log.warn("Executor for " + url + " is shutdown. recreate global executor, cost :{}", createEnd - createStart);

}

return executor;

}

@Override

public void updateThreadpool(URL url, ExecutorService executor) {

try {

if (url.hasParameter(THREADS_KEY)

&& executor instanceof ThreadPoolExecutor && !executor.isShutdown()) {

ThreadPoolExecutor threadPoolExecutor = (ThreadPoolExecutor) executor;

int threads = url.getParameter(THREADS_KEY, 0);

int max = threadPoolExecutor.getMaximumPoolSize();

int core = threadPoolExecutor.getCorePoolSize();

if (threads > 0 && (threads != max || threads != core)) {

if (threads < core) {

threadPoolExecutor.setCorePoolSize(threads);

if (core == max) {

threadPoolExecutor.setMaximumPoolSize(threads);

}

} else {

threadPoolExecutor.setMaximumPoolSize(threads);

if (core == max) {

threadPoolExecutor.setCorePoolSize(threads);

}

}

}

}

} catch (Throwable t) {

log.error(t.getMessage(), t);

}

}

@Override

public ScheduledExecutorService nextScheduledExecutor() {

return scheduledExecutors.pollItem();

}

@Override

public ScheduledExecutorService getServiceExporterExecutor() {

return serviceExporterExecutor;

}

@Override

public ExecutorService getSharedExecutor() {

log.info("using shared executor");

return SHARED_EXECUTOR;

}

@Override

public void destroyAll() {

log.info("destroy all");

long start = System.currentTimeMillis();

data.values().forEach(executors -> {

if (executors != null) {

executors.values().forEach(executor -> {

if (executor != null && !executor.isShutdown()) {

ExecutorUtil.shutdownNow(executor, 100);

}

});

}

});

data.clear();

long end = System.currentTimeMillis();

log.info("destroy all cost :" + (end - start));

}

private ExecutorService createExecutor(URL url) {

return (ExecutorService) ExtensionLoader.getExtensionLoader(ThreadPool.class).getAdaptiveExtension().getExecutor(url);

}

private String getConsumerExecutorKey(URL url) {

// return url.getIp() + "-" + url.getPort();

return "global";

}

}扩展后重新发版,问题得到了解决。